The ADASMark™ Benchmark

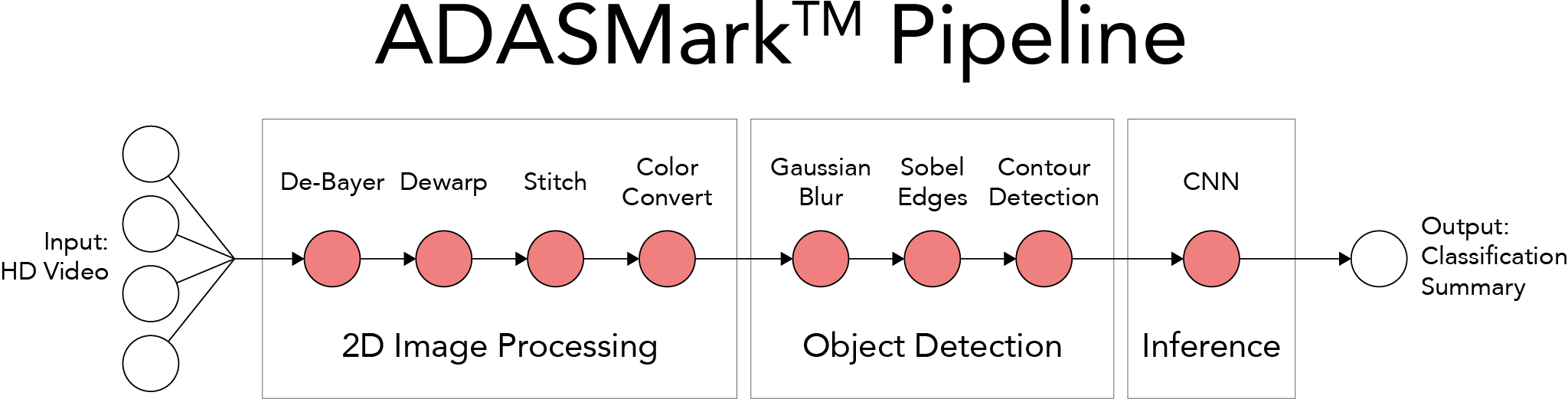

The EEMBC® ADASMark benchmark suite is a performance measurement and optimization tool for automotive companies building next-generation advanced driver-assistance systems (ADAS). Intended to analyze the performance of SoCs used in autonomous driving, ADASMark uses real-world workloads that represent highly parallel applications such as surround-view stitching, segmentation, and convolutional neural-net (CNN) traffic sign classification. The ADASMark benchmarks stress several kinds of compute resources, such as the CPU, GPU, and hardware accelerators, and thus allow the user to determine the optimal utilization of the available compute resources.

ADAS Level-5 requires an advanced degree of on-board compute power. (Concept only, not the benchmark.)

How ADASMark Works

ADAS implementations above Level 2 require compute-intensive object-detection and visual-classification capabilities. A common solution to these requirements uses a collection of visible-spectrum, wide-angle cameras placed around the vehicle, and an image-processing system that prepares these images for classification by a trained CNN. The output of the classifier feeds additional decision-making logic such as the steering and braking systems. This arrangement requires a significant amount of compute power, and assessing the limits of the available resources and how efficiently they are utilized is not a simple task. The ADASMark benchmark addresses this challenge by combining application use cases with synthetic test collections into a series of microbenchmarks that measure and report performance and latency for SoCs handling computer vision, autonomous driving, and mobile imaging tasks.

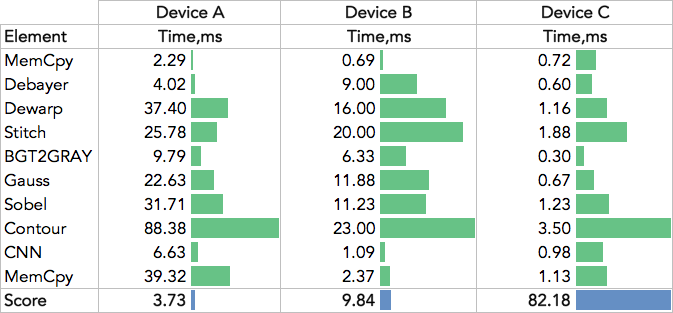

Specifically, the front end of the benchmark contains the image-processing functions for de-warping, colorspace conversion (Bayer), stitching, Gaussian blur, and Sobel threshold filtering, the last of which identifies regions of interest (ROI) for the classifier. The back-end image-classification portion of the benchmark executes a CNN trained to recognize traffic signs. An input video stream composed of four HD surround-camera feeds is provided as part of the benchmark. Each frame of the video (one for each camera) passes through the directed acyclic graph (DAG). At four nodes (blur, threshold, ROI, and classification) the framework validates the work of the pipeline for accuracy. If the accuracy is within the permitted threshold, the test passes. Platform performance is recorded as the execution time and overhead of only the vision-related portions of the DAG; the benchmark time therefore excludes the main-thread processing of the video file and the overhead associated with splitting the data streams to different edges of the DAG. The overall score is the inverse of the longest path through the DAG, expressed in frames per second.
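The scoring rule just described (the score is the inverse of the longest path through the DAG) can be sketched in a few lines. This is only an illustration under stated assumptions, not EEMBC's implementation: the node names follow the stages named in the text, but the graph shape, the per-node timings in milliseconds, and the helper names `longest_path_ms` and `frames_per_second` are all hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def longest_path_ms(durations, predecessors):
    """Return the duration of the longest (critical) path through a DAG.

    durations:    node name -> execution time in milliseconds
    predecessors: node name -> list of nodes that must finish first
    """
    finish = {}
    # static_order() yields a node only after all of its predecessors.
    for node in TopologicalSorter(predecessors).static_order():
        preds = predecessors.get(node, ())
        start = max((finish[p] for p in preds), default=0)
        finish[node] = start + durations[node]
    return max(finish.values())


def frames_per_second(durations, predecessors):
    """Score = inverse of the longest path, converted from ms to frames/s."""
    return 1000.0 / longest_path_ms(durations, predecessors)


# Hypothetical per-node timings (ms). Only vision nodes are listed; video
# decoding and stream splitting are excluded, as the text states.
durations = {
    "debayer": 2, "dewarp": 4, "stitch": 6,
    "blur": 3, "threshold": 1, "roi": 2, "classify": 7,
}

# Hypothetical graph shape: the classifier consumes both the stitched image
# and the ROI list, so two paths of different length reach it.
predecessors = {
    "dewarp": ["debayer"],
    "stitch": ["dewarp"],
    "blur": ["stitch"],
    "threshold": ["blur"],
    "roi": ["threshold"],
    "classify": ["stitch", "roi"],
}

print(longest_path_ms(durations, predecessors))    # 25
print(frames_per_second(durations, predecessors))  # 40.0
```

With these made-up numbers the critical path runs through every stage (2 + 4 + 6 + 3 + 1 + 2 + 7 = 25 ms), so the reported score would be 40 frames per second. Shortening a node that is off the critical path would not change the score.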

The ADASMark benchmark framework uses the OpenCL 1.2 Embedded Profile API to ensure consistency between compute implementations, since most vendors providing a heterogeneous architecture support this popular API. The developer is free to construct the optimal graph and to provide their own OpenCL kernels, which makes it possible to compare the default benchmark against a version tuned for a specific architecture.

Key Characteristics of the EEMBC ADASMark Benchmark

- Uses the OpenCL 1.2 Embedded Profile API

- Application flows created by a series of micro-benchmarks

- Traffic Sign Recognition CNN inference engine made by Au-zone

- Runs both the default benchmark and a version optimized for the specific architecture

- Repeatable, verifiable, and certifiable, as with other EEMBC benchmarks

How to License ADASMark

Visit the corporate or academic license request page for pricing and licensing info.

NOTE: This benchmark requires an intermediate-to-advanced degree of programming proficiency in an OpenCL environment under Linux.